AWS vs GCP: Which Cloud Service Logs Can Provide the Most Valuable Data to Improve Your Business

The infrastructure and services running on public cloud platforms like Google Cloud Platform (GCP) and Amazon Web Services (AWS) produce massive volumes of logs every day. An organization’s log data describes its entire IT environment, both in real time and at any point in its history. Cloud service logs often contain details on machine and network traffic, user access, changes to applications and services, and many other signals used to monitor the health and security of the IT landscape.

Cloud log analysis systems let users extract intelligence from log data by running simple searches and complex queries, conducting trend research, and building data visualizations. Beyond cloud infrastructure, applications and platform resources produce their own logs, which adds to the sheer volume of data DevOps teams need to analyze regularly.

Some of the most common use cases for log analytics include security, IT monitoring, business intelligence and analytics, and business operations tasks. Knowing the basics of which cloud logs to watch and why can help teams search for potential infrastructure or security issues, improve stability and reliability, understand cloud resource allocation, and identify trends that can lead to important business improvements. Let’s dive into the top GCP and AWS logs to watch, and what they will show you.

Top cloud services log files for AWS

Monitoring and analyzing activity within your AWS account can be challenging. To help, AWS offers AWS CloudTrail, an API logging and auditing service that records actions taken in your account and can deliver them as log files to an Amazon Simple Storage Service (Amazon S3) bucket. By default, CloudTrail records management events, such as creating or deleting resources. You can also enable data events, such as S3 object reads and writes. The result is a detailed record of activity in your account that shows who did what, when, and from where.
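
As a rough illustration of the "who did what, when, and where" view, the Python sketch below reads one CloudTrail log file and extracts a few fields from each record. A CloudTrail log file is a JSON object with a `Records` array. The sketch uses `userIdentity`, `eventSource`, `eventName`, `eventTime`, `sourceIPAddress`, and `awsRegion`. The `ApiCall` type and `summarize_cloudtrail` function are hypothetical helpers, and the sample record is made up and heavily trimmed. Real records carry many more fields.

```python
import json
from typing import NamedTuple


class ApiCall(NamedTuple):
    who: str    # caller's IAM ARN, or the identity type when no ARN is present
    what: str   # "<eventSource>:<eventName>", e.g. "ec2.amazonaws.com:RunInstances"
    when: str   # eventTime, an ISO 8601 UTC timestamp
    where: str  # source IP address plus AWS Region


def summarize_cloudtrail(log_text: str) -> list[ApiCall]:
    """Reduce one CloudTrail log file to who/what/when/where rows."""
    calls = []
    for record in json.loads(log_text).get("Records", []):
        identity = record.get("userIdentity", {})
        calls.append(ApiCall(
            who=identity.get("arn") or identity.get("type", "unknown"),
            what=f'{record.get("eventSource")}:{record.get("eventName")}',
            when=record.get("eventTime", ""),
            where=f'{record.get("sourceIPAddress")} ({record.get("awsRegion")})',
        ))
    return calls


# A made-up, heavily trimmed record for illustration.
sample = json.dumps({"Records": [{
    "eventTime": "2024-05-01T12:00:00Z",
    "eventSource": "ec2.amazonaws.com",
    "eventName": "RunInstances",
    "awsRegion": "us-east-1",
    "sourceIPAddress": "203.0.113.10",
    "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
}]})

for call in summarize_cloudtrail(sample):
    print(call)
```

Each log file holds many records, so in practice a function like this would run over every object the trail delivers to S3.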

AWS documentation highlights four kinds of CloudTrail log entries that can be useful for DevOps teams:

- Identity and access management (IAM) logs allow teams to monitor how users access the system, as well as understand user permissions for sensitive data. This capability is useful in any organization, but particularly within highly regulated environments.

- Error code and message logs can be used to troubleshoot issues. For example, if someone uses the AWS command-line interface (CLI) to update a trail with a name that does not exist, the resulting log entry records an error code (TrailNotFoundException) and an error message.

- CloudTrail Insights event logs are actually a pair of events that mark the start and end of a period of unusual write management API activity or error response activity. These logs can help your team identify and respond to unusual activity.

- Amazon Elastic Compute Cloud (Amazon EC2) provides resizable computing capacity in the AWS Cloud. CloudTrail’s EC2 log entries record the API calls used to launch virtual servers, configure security and networking, and manage storage. Because Amazon EC2 Auto Scaling can add or remove instances to handle spikes in traffic, these logs can help your team understand how auto-scaling behaves and look for opportunities to optimize resources.
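
The four kinds of entries above can be separated with a few field checks. This is a minimal sketch, not an AWS tool, and it rests on several assumptions about record structure. Insights events are assumed to carry an `eventType` of `AwsCloudTrailInsight`, an `insightDetails.state` of `Start` or `End`, and a `sharedEventID` common to both events in a pair. Ordinary records are classified by `eventSource` and by whether an `errorCode` is present. The `categorize` and `insight_windows` helpers and the sample records are hypothetical.

```python
from collections import defaultdict


def categorize(records: list[dict]) -> dict[str, list[dict]]:
    """Bucket CloudTrail records into insight, error, iam, and ec2 groups."""
    buckets: dict[str, list[dict]] = defaultdict(list)
    for r in records:
        if r.get("eventType") == "AwsCloudTrailInsight":
            buckets["insight"].append(r)
            continue  # Insights events summarize unusual activity, not a single call
        if "errorCode" in r:
            buckets["error"].append(r)  # failed calls; may also land in iam/ec2 below
        if r.get("eventSource") == "iam.amazonaws.com":
            buckets["iam"].append(r)
        elif r.get("eventSource") == "ec2.amazonaws.com":
            buckets["ec2"].append(r)
    return dict(buckets)


def insight_windows(insights: list[dict]) -> dict[str, dict[str, str]]:
    """Pair Insights Start/End events by sharedEventID into {id: {state: eventTime}}."""
    windows: dict[str, dict[str, str]] = {}
    for r in insights:
        state = r.get("insightDetails", {}).get("state")  # "Start" or "End"
        windows.setdefault(r.get("sharedEventID"), {})[state] = r.get("eventTime")
    return windows


# Made-up records trimmed to the fields the helpers read.
records = [
    {"eventSource": "cloudtrail.amazonaws.com", "eventName": "UpdateTrail",
     "errorCode": "TrailNotFoundException", "errorMessage": "Unknown trail: myTrail2"},
    {"eventSource": "iam.amazonaws.com", "eventName": "CreateUser"},
    {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances"},
    {"eventType": "AwsCloudTrailInsight", "sharedEventID": "example-shared-id",
     "eventTime": "2024-05-01T10:00:00Z", "insightDetails": {"state": "Start"}},
    {"eventType": "AwsCloudTrailInsight", "sharedEventID": "example-shared-id",
     "eventTime": "2024-05-01T10:30:00Z", "insightDetails": {"state": "End"}},
]

buckets = categorize(records)
windows = insight_windows(buckets["insight"])
```

A successful record from any other service falls outside every bucket in this sketch. A fuller version would add groups as needed.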

Other AWS services, such as Amazon Route 53, generate their own logs. Route 53 offers DNS query logging, and its health checks let you monitor the availability of your resources. This data is worth watching because attackers often use techniques such as distributed denial of service (DDoS) attacks to block legitimate traffic, or DNS tunneling to smuggle data or malware commands through DNS queries.
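
As a hedged illustration of what DNS query logs make possible, the sketch below parses query log lines and flags names that might indicate tunneling. It assumes the space-delimited format of Route 53 public DNS query logs, with fields in this order: version, timestamp, hosted zone ID, query name, query type, response code, protocol, edge location, resolver IP, and EDNS client subnet. Route 53 Resolver query logs use JSON and would need a different parser. The tunneling check is a deliberately crude heuristic based on label length and character entropy, not a real detector. Its thresholds and all function names are hypothetical.

```python
import math
from collections import Counter

# Assumed field order of a Route 53 public DNS query log line (space-delimited).
FIELDS = ["version", "timestamp", "hosted_zone_id", "query_name", "query_type",
          "response_code", "protocol", "edge_location", "resolver_ip", "edns_client_subnet"]


def parse_query_log(line: str) -> dict[str, str]:
    """Split one query log line into named fields."""
    return dict(zip(FIELDS, line.split()))


def shannon_entropy(text: str) -> float:
    """Bits per character; encoded payloads tend to score higher than human-chosen names."""
    counts = Counter(text)
    n = len(text)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def looks_like_tunneling(query_name: str, max_len: int = 40, max_entropy: float = 3.5) -> bool:
    """Crude heuristic on the leftmost label; both thresholds are arbitrary examples."""
    label = query_name.split(".")[0]
    if len(label) > max_len:
        return True
    # Only score entropy on labels long enough for the measure to mean much.
    return len(label) >= 16 and shannon_entropy(label) > max_entropy


# Made-up log lines for illustration.
lines = [
    "1.0 2017-12-13T08:16:02.130Z Z123412341234 example.com A NOERROR UDP FRA6 192.168.1.1 -",
    "1.0 2017-12-13T08:16:05.412Z Z123412341234 abcdefghijklmnopqrstuvwxyz0123.example.com "
    "TXT NOERROR UDP FRA6 192.168.1.1 -",
]
suspicious = [r["query_name"] for r in map(parse_query_log, lines)
              if looks_like_tunneling(r["query_name"])]
```

Legitimate services such as CDNs and anti-malware lookups also generate long, random-looking names. Any flag from a heuristic like this is a lead to investigate, not a verdict.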

Top categories of GCP cloud-based log data

Like AWS logs, GCP logs are tied to specific Google Cloud services. They can help your team understand how those services operate in your environment, as well as debug or troubleshoot issues. Google’s documentation maintains a full index of GCP services that generate logs, which offers examples of the types of GCP logs you may encounter.

Beyond these service-specific (platform) logs, other common categories of Google Cloud logs include:

- User-written logs, which contain information related to a user’s custom applications or services. These logs are typically written to Google Cloud Logging using the logging agent, the Cloud Logging API, or the Cloud Logging client libraries. User-written logs can help your team identify potential issues with custom applications, or how these applications are interacting with your infrastructure.

- Component logs, which are a hybrid of platform logs and user-written logs. They are generated by Google-provided software components that run on your own systems and are sent to your Google Cloud project. One example is Google Kubernetes Engine (GKE) components running on your own virtual machines or in your own data center. The component’s provider can use these logs or their metadata to support users of the component, and internal DevOps teams can use them to troubleshoot issues.

- Cloud security logs, such as Cloud Audit Logs and Access Transparency logs. Cloud Audit Logs record activity in each Cloud project, folder, and organization. They include Admin Activity, Data Access, System Event, and Policy Denied logs, and serve purposes similar to the AWS IAM logs described above. Access Transparency logs record actions taken by Google staff when accessing your Google Cloud content, providing an audit trail for legal and regulatory compliance requirements.
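
A common pattern for user-written logs is structured logging, where the application writes one JSON object per line to standard output. In environments whose logging agent parses that output, such as Cloud Run or GKE, Cloud Logging uses the special `severity` field as the entry’s severity and `message` as its main text. Other keys are kept as structured `jsonPayload` fields. The sketch below assumes such an environment. The `log_structured` function and the `component` and `order_id` fields are hypothetical names.

```python
import json
import sys


def log_structured(severity: str, message: str, **fields: object) -> str:
    """Write one JSON log line to stdout and return it."""
    # "severity" and "message" are the special keys Cloud Logging looks for;
    # any extra keyword arguments become additional JSON fields.
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, file=sys.stdout, flush=True)
    return line


# Hypothetical application fields: "component" and "order_id".
line = log_structured("ERROR", "payment request failed", component="checkout", order_id="A-1001")
```

Because every entry carries the same named fields, queries in Cloud Logging can filter on them directly, for example all `ERROR` entries from one component. Otherwise, those values would have to be parsed out of free-form text.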

AWS vs. GCP: Which provides the best cloud logs service?

When it comes to log analysis, AWS and GCP both offer powerful tools and services, each with its own set of features, advantages, and limitations. Here, we’ll compare AWS and GCP based on several key aspects of log analysis to help you decide which platform might be better suited for your needs.

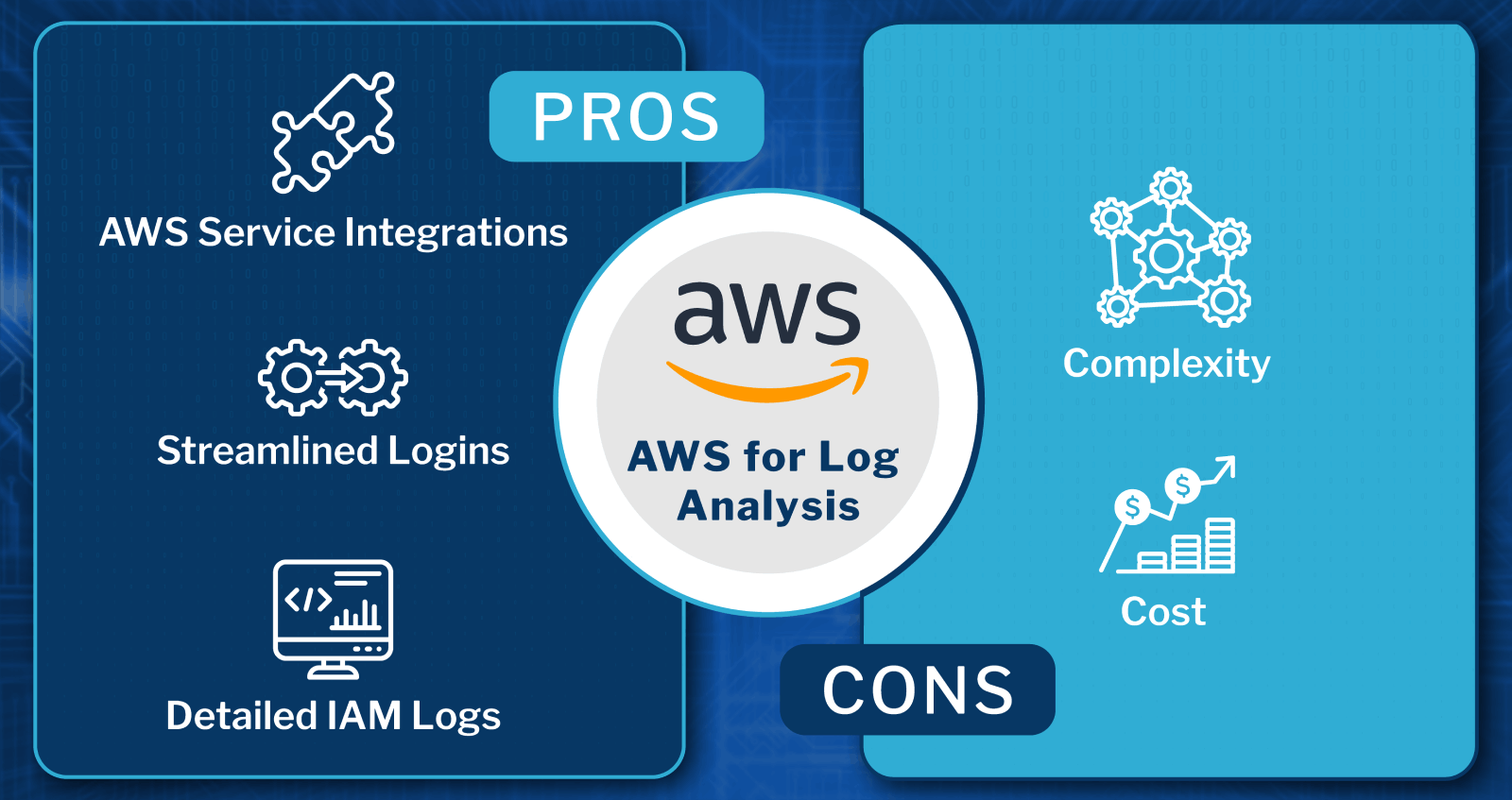

AWS for log analysis

Pros:

- Comprehensive Logging Services: AWS provides a range of logging services like CloudTrail, CloudWatch Logs, and VPC Flow Logs. These services cover a wide spectrum of logging needs from API calls to network traffic.

- Integration with Other AWS Services: AWS logging services integrate with other AWS tools such as Lambda, S3, and Redshift, enhancing your ability to automate log analysis and storage.

- Detailed IAM Logs: CloudTrail provides extensive logs on Identity and Access Management (IAM) activities, which are crucial for monitoring user permissions and actions in highly regulated environments.
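
To give a sense of the network-traffic side, the sketch below reads VPC Flow Logs records in the default (version 2) format. The fields appear in this order: version, account ID, interface ID, source and destination address and port, protocol, packets, bytes, start, end, action, and log status. The sketch then totals rejected bytes per source address. It assumes the default format rather than a custom one. The `rejected_bytes_by_source` function and the sample lines are illustrative.

```python
from collections import Counter

# Default (version 2) VPC Flow Logs record fields, in order.
DEFAULT_FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr", "srcport",
                  "dstport", "protocol", "packets", "bytes", "start", "end", "action", "log_status"]


def rejected_bytes_by_source(lines: list[str]) -> Counter:
    """Sum bytes of REJECTed flows per source address."""
    totals: Counter = Counter()
    for line in lines:
        rec = dict(zip(DEFAULT_FIELDS, line.split()))
        if rec.get("log_status") != "OK":
            continue  # NODATA/SKIPDATA records carry "-" in the numeric fields
        if rec["action"] == "REJECT":
            totals[rec["srcaddr"]] += int(rec["bytes"])
    return totals


sample_lines = [
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-1235b8ca123456789 172.31.9.69 172.31.9.12 49761 3389 6 20 4249 1418530010 1418530070 REJECT OK",
    "2 123456789010 eni-1235b8ca123456789 - - - - - - - 1431280876 1431280934 - NODATA",
]
totals = rejected_bytes_by_source(sample_lines)
```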

Cons:

- Complexity: AWS offers a wide range of logging tools, which can be overwhelming and complex to configure and manage. CloudWatch, for example, is often described as having a complex user interface.

- Cost: The cost of log storage and analysis can escalate quickly, especially with the high volume of logs generated by large environments. Long-term retention can also be limited or costly in some services. For example, CloudWatch metrics are kept for at most 15 months, which makes long-term trends harder to examine. It is possible to reduce AWS log costs by keeping data in place (we’ll discuss this more later).

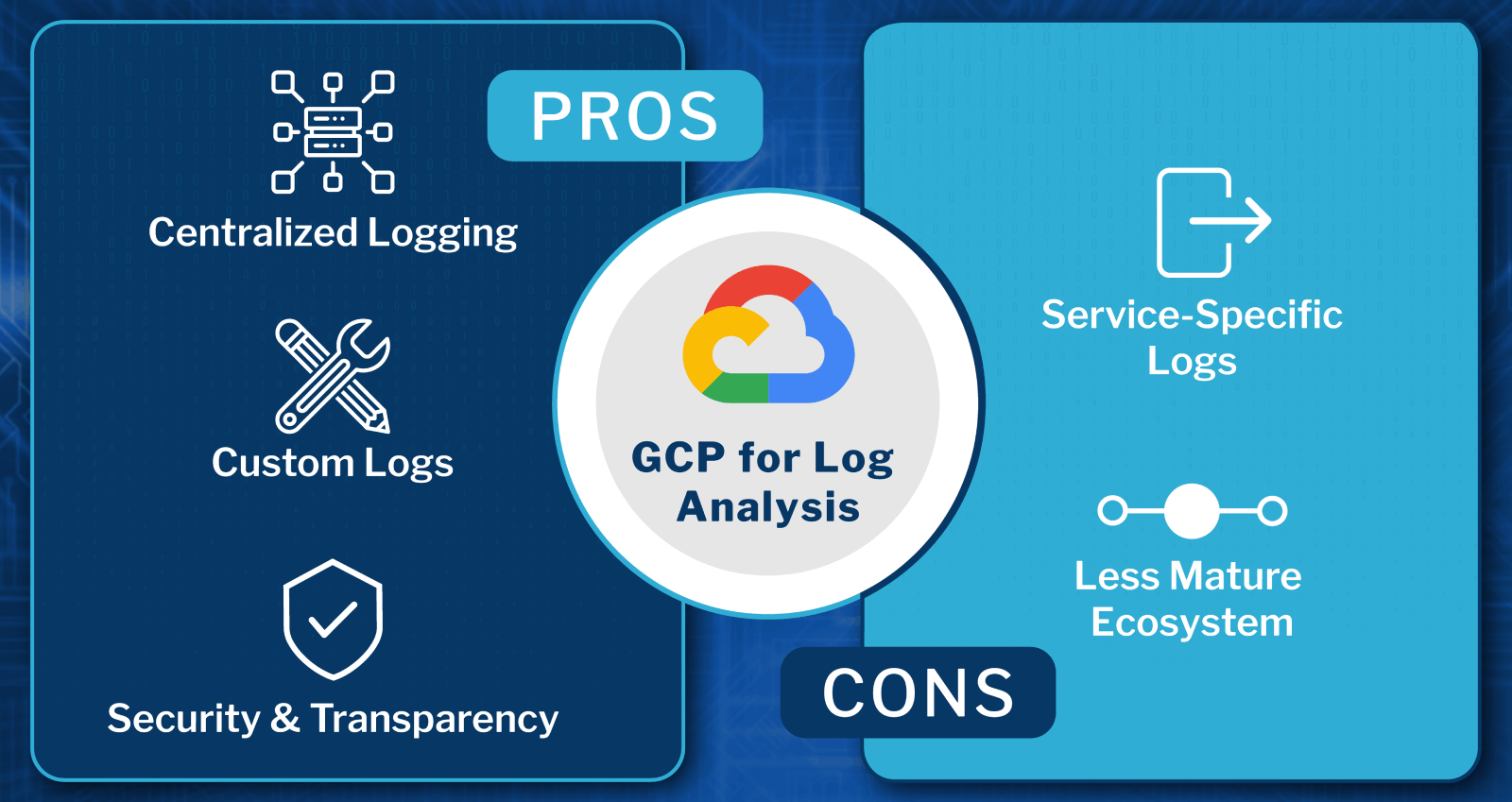

GCP for log analysis

Pros:

- Centralized Logging: Google Cloud Logging provides a centralized platform for managing logs from various GCP services, making it easier to search, filter, and analyze logs.

- Custom Logs: GCP supports user-written logs, which allow you to log custom application data directly into the logging system using client libraries or APIs.

- Security and Transparency: GCP's Cloud Audit Logs and Access Transparency logs offer detailed insights into admin and data access activities, aiding in compliance and security monitoring.

Cons:

- Service-Specific Logs: While GCP's logging services are robust, they can be more fragmented and service-specific compared to AWS, potentially requiring more effort to aggregate and analyze logs from different services.

- Less Mature Ecosystem: Although GCP's logging capabilities are strong, the ecosystem and community support around log analysis may not be as mature as those for AWS.

In conclusion, the choice between AWS and GCP for log analysis will largely depend on several factors: your specific requirements, your existing cloud infrastructure, your team's familiarity with each platform, and each platform's pricing model. Both platforms offer robust logging solutions. AWS is generally favored for its extensive integrations and comprehensive logging tools, while GCP stands out for its centralized approach and strong security features. On either platform, a third-party log management solution like ChaosSearch can help simplify cloud logging by relying on low-cost cloud object storage, which also streamlines the pricing structure.

Top cloud services log management strategy

While the types of logs and their purposes may seem similar across cloud platforms, the sheer volume of cloud service logs can make it difficult for teams to fully understand what’s happening. Each day, an average enterprise’s cloud applications, containers, compute nodes, and other components can generate thousands or even millions of log entries. Serverless workloads, where short-lived functions scale up and down on demand, make log management even more challenging.

Cloud operations (CloudOps) teams use these logs to maintain stability, optimize performance, control costs, and govern data usage. The insights in cloud service logs give CloudOps teams the data they need to respond to events quickly and accelerate root cause analysis. These teams typically adopt a centralized log management strategy, especially when the organization operates in a multi-cloud environment.
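
In a multi-cloud setup, a core step of centralizing logs is mapping each provider’s audit records onto one shared schema so they can be searched together. The sketch below makes assumptions about which fields each provider uses. For CloudTrail records, it reads `userIdentity.arn`, `eventSource`, `eventName`, `eventTime`, and `sourceIPAddress`. For Google Cloud Audit Logs entries, it reads `timestamp` and the `protoPayload` fields `authenticationInfo.principalEmail`, `serviceName`, `methodName`, and `requestMetadata.callerIp`. The `AuditEvent` schema, the helper functions, and the sample records are hypothetical and are not part of any provider’s SDK.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    cloud: str
    timestamp: str
    principal: str
    action: str
    source_ip: str


def from_cloudtrail(record: dict) -> AuditEvent:
    """Map an AWS CloudTrail record onto the shared schema."""
    return AuditEvent(
        cloud="aws",
        timestamp=record.get("eventTime", ""),
        principal=record.get("userIdentity", {}).get("arn", ""),
        action=f'{record.get("eventSource")}:{record.get("eventName")}',
        source_ip=record.get("sourceIPAddress", ""),
    )


def from_gcp_audit(entry: dict) -> AuditEvent:
    """Map a Google Cloud Audit Logs entry (LogEntry with an AuditLog protoPayload)."""
    payload = entry.get("protoPayload", {})
    return AuditEvent(
        cloud="gcp",
        timestamp=entry.get("timestamp", ""),
        principal=payload.get("authenticationInfo", {}).get("principalEmail", ""),
        action=f'{payload.get("serviceName")}:{payload.get("methodName")}',
        source_ip=payload.get("requestMetadata", {}).get("callerIp", ""),
    )


def parse_utc(ts: str) -> datetime:
    """Parse a UTC 'Z' timestamp to whole seconds (GCP may emit nanoseconds, which strptime can't read)."""
    return datetime.strptime(ts.rstrip("Z").split(".")[0], "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)


# Made-up, trimmed records for illustration.
aws_record = {"eventTime": "2024-05-01T12:00:30Z", "eventSource": "iam.amazonaws.com",
              "eventName": "CreateUser", "sourceIPAddress": "203.0.113.10",
              "userIdentity": {"arn": "arn:aws:iam::123456789012:user/alice"}}
gcp_entry = {"timestamp": "2024-05-01T12:00:05.123456789Z",
             "protoPayload": {"serviceName": "iam.googleapis.com",
                              "methodName": "google.iam.admin.v1.CreateServiceAccount",
                              "authenticationInfo": {"principalEmail": "bob@example.com"},
                              "requestMetadata": {"callerIp": "198.51.100.7"}}}

# One merged, time-ordered view across both clouds.
timeline = sorted([from_cloudtrail(aws_record), from_gcp_audit(gcp_entry)],
                  key=lambda e: parse_utc(e.timestamp))
```

Truncating to whole seconds keeps the parser simple. The trade-off is that events within the same second keep their input order rather than their exact order.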

A centralized log analytics platform can abstract away the complexity of cloud service logs, helping teams quickly improve the stability and agility of their cloud environments. However, most organizations face log retention and cost challenges with typical log management solutions such as Elasticsearch, which can become both brittle and expensive to maintain as they scale.

As a result, organizations often must make a cost vs. retention tradeoff, leaving gaps in the data available for analysis. This can be harmful for long-term trend analysis, or the discovery of advanced, persistent security threats. To address these concerns, many teams are rethinking their enterprise data architectures so they don’t need to make these difficult retention decisions.
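
A back-of-the-envelope calculation makes the cost vs. retention tradeoff concrete. At steady state, the data held equals daily ingest multiplied by the retention window. A fixed monthly storage budget therefore caps the retention window at budget ÷ (daily ingest × price per GB-month). The sketch below uses placeholder prices that are hypothetical, not real AWS, GCP, or ChaosSearch pricing. It also ignores compression, ingestion, and query costs.

```python
def stored_gb(daily_ingest_gb: float, retention_days: int) -> float:
    """Volume held once the retention window is full: one window of daily ingest."""
    return daily_ingest_gb * retention_days


def monthly_cost(daily_ingest_gb: float, retention_days: int, price_per_gb_month: float) -> float:
    """Steady-state monthly storage cost for a given retention window."""
    return stored_gb(daily_ingest_gb, retention_days) * price_per_gb_month


def max_retention_days(monthly_budget: float, daily_ingest_gb: float, price_per_gb_month: float) -> int:
    """Longest retention window whose steady-state storage fits the budget."""
    return int(monthly_budget // (daily_ingest_gb * price_per_gb_month))


# Placeholder prices for illustration only, not real vendor pricing.
HOT_TIER_PRICE = 0.10      # $/GB-month, hypothetical indexed "hot" storage
OBJECT_STORE_PRICE = 0.02  # $/GB-month, hypothetical object storage

budget, ingest = 300.0, 100.0  # $/month and GB/day, both hypothetical
hot_days = max_retention_days(budget, ingest, HOT_TIER_PRICE)
object_days = max_retention_days(budget, ingest, OBJECT_STORE_PRICE)
```

With these made-up numbers, the same budget buys five times the retention on the cheaper tier. The general point holds whatever the real prices are: the retention window scales inversely with the per-GB storage price.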

A cloud data platform like ChaosSearch can help you use existing, low-cost cloud object storage, such as Amazon S3 or Google Cloud Storage, by making the data stored there searchable for analytics in place. ChaosSearch can also be used to optimize the cost of an AWS data lake.

With this approach, teams can conduct cloud log analysis without transforming or moving data, or relying on data engineering teams to build complex ETL pipelines for them. This shortens time to insight and helps close important security and compliance gaps.
